While searching for some information on Python locks I recently ran across this great post by David Beazley. In it, he explains how the synchronization primitives in the Python standard library are implemented. Basically, Lock is implemented as a binary semaphore in C, and the rest are implemented in pure Python. Even though the post is from 2009, this is still the case. UPDATE: As Antoine Pitrou points out in the comments, starting with CPython 3.2, RLock is now implemented in C.

This got me thinking. As you know I’ve created pyuv, a Python wrapper for libuv, and libuv includes cross-platform implementations of mutexes, semaphores, conditions, rwlocks and barriers, which I never bothered to add to pyuv. I left them out because I thought they didn’t add any value, but after reading David’s article I decided to do a quick test: implement wrappers for a mutex and a condition variable and use them in a Queue implementation in order to see if there was any difference in performance. Not that I ever ran into performance issues related to that, but I was curious anyway 🙂

Someone may think “oh, but given that Python has the GIL, how does using multiple threads and speeding up the locks matter?”. The GIL is released whenever an I/O operation is performed, so if your Python application is multithreaded and it mainly deals with I/O-bound tasks, the GIL is not that relevant. If your application is CPU bound, however, you'd better have a look at the multiprocessing module.
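To make that point a bit more concrete, here is a small sketch using only the standard library. It is not from the original test; `fake_io` and `run_threaded` are names made up for this illustration, and `time.sleep` stands in for a blocking I/O call because it releases the GIL while waiting.

```python
import threading
import time


def fake_io(delay):
    # Hypothetical stand-in for a blocking read/recv: time.sleep
    # releases the GIL while it waits, like real blocking I/O does.
    time.sleep(delay)


def run_threaded(n_threads, delay):
    """Run n_threads fake I/O calls concurrently and return the elapsed time."""
    threads = [
        threading.Thread(target=fake_io, args=(delay,))
        for _ in range(n_threads)
    ]
    start = time.perf_counter()
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return time.perf_counter() - start


if __name__ == "__main__":
    # Four waits of 0.2s each overlap instead of adding up.
    print("elapsed: %.2fs" % run_threaded(4, 0.2))
```

If the waits were serialized by the GIL, the total would be close to the sum of the delays; since they overlap, it should stay close to a single delay.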

So, let's get into the code! I implemented Barrier, Condition, Mutex, RWLock and Semaphore in this pyuv branch, which directly wrap their libuv counterparts. Then I copied the Queue implementation from the standard library and used the freshly wrapped synchronization primitives:

[gist]https://gist.github.com/3997233[/gist]
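The gist has the real thing. For readers who just want the general shape, here is a stripped-down sketch of a queue built from one mutex and one condition variable. It uses `threading.Lock` and `threading.Condition` from the standard library rather than the pyuv wrappers, and `TinyQueue` is a hypothetical name used only for this illustration, so treat it as an outline of the pattern and not as the code that was benchmarked.

```python
import threading
from collections import deque


class TinyQueue:
    """Unbounded FIFO queue guarded by one mutex and one condition.

    Illustration only: the stdlib primitives here play the role that
    the wrapped libuv mutex and condition play in the gist.
    """

    def __init__(self):
        self._items = deque()
        self._mutex = threading.Lock()
        # The condition shares the mutex, so waiting and notifying
        # happen under the same lock that protects the deque.
        self._not_empty = threading.Condition(self._mutex)

    def put(self, item):
        with self._not_empty:
            self._items.append(item)
            self._not_empty.notify()  # wake up one waiting consumer

    def get(self):
        with self._not_empty:
            # Loop to guard against spurious wakeups and lost races.
            while not self._items:
                self._not_empty.wait()
            return self._items.popleft()
```

The only places the locking primitives show up are the constructor and the two `with` blocks, which is why swapping in a different mutex and condition implementation is a small change.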

For the testing part, I used the timeit function from IPython with 5 runs. Not sure if it’s the best way, but results suggest it is a good way 🙂 Here is the test script:

[gist]https://gist.github.com/3997244[/gist]
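If you don't have IPython at hand, a similar measurement can be sketched with the standard `timeit` module. This is not the script from the gist: `run_once`, `bench`, the producer/consumer pairing and the item counts are all assumptions made for this example.

```python
import queue
import threading
import timeit


def run_once(queue_cls, n_pairs, n_items):
    """Push n_items through a fresh queue with n_pairs producers and consumers."""
    q = queue_cls()

    def producer():
        for i in range(n_items):
            q.put(i)

    def consumer():
        for _ in range(n_items):
            q.get()

    threads = []
    for _ in range(n_pairs):
        threads.append(threading.Thread(target=producer))
        threads.append(threading.Thread(target=consumer))
    for t in threads:
        t.start()
    for t in threads:
        t.join()


def bench(queue_cls, n_pairs, n_items=1000, runs=5):
    """Return the best wall-clock time in seconds over `runs` repetitions."""
    timer = timeit.Timer(lambda: run_once(queue_cls, n_pairs, n_items))
    return min(timer.repeat(repeat=runs, number=1))


if __name__ == "__main__":
    for pairs in (1, 2, 50):
        print(pairs * 2, "threads:", bench(queue.Queue, pairs))
```

Any class with blocking `put()` and `get()` methods can be passed as `queue_cls`, so the same harness can compare the standard library Queue against a custom one.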

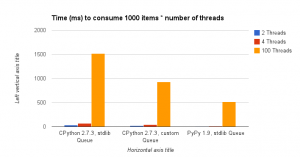

Here are the results:

The tests were run with 2, 4 and 100 threads, and since I was testing performance, I added PyPy to the mix. Now, as you can see in the results, the custom Queue is about 33% faster than the one in the standard library, so I was pretty happy about that. Until I tested PyPy. It just beats the shit out of both, which is awesome 🙂

The performance increase on CPython is nice. There is a downside, however: libuv treats errors in these primitives a bit “abruptly”, by calling abort(). This means that if you use the locks incorrectly, your program will core dump. I personally like it, because it helps you find and fix the problem right away, but not everyone may like it.
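For contrast, this is how the standard library reacts to the same kind of misuse on Python 3: releasing a lock that is not held raises an exception you can catch. The snippet only uses `threading.Lock`; `release_unlocked` is a hypothetical helper written for this example. With the libuv-backed primitives, the equivalent mistake would end in abort() as described above, so there would be nothing to catch.

```python
import threading


def release_unlocked():
    """Release a lock that was never acquired and return the resulting error."""
    lock = threading.Lock()
    try:
        lock.release()  # misuse: the lock is not held
    except RuntimeError as exc:
        # The stdlib reports the mistake as a regular exception,
        # so the process keeps running.
        return exc
    return None


if __name__ == "__main__":
    print(repr(release_unlocked()))
```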

:wq