I’ve been working on-and-off on this project for almost a year during my free time, and after meditating about it I thought: “fuck it, ship it”. Allow me to introduce Evergreen: cooperative multitasking and i/o for Python.

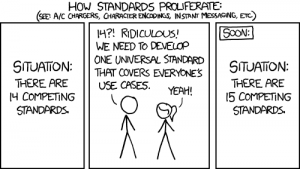

“So, another framework?” I hear you say. Yes, it’s another async framework. But it’s my async framework. I’ve used a number of frameworks for developing servers in Python such as Twisted, Tornado, Eventlet, Gevent and lately Tulip and all of them have great and not so great things, so I decided to blend the ideas I gathered from all of them, add some opinionated decisions, some Stackless flavour and Evergreen was the result.

Evergreeen is a framework which allows developers to write synchronous looking code which is executed asynchronously in a cooperative manner. Evergreen presents an API which looks like the one you would use to write concurrent programs using threads or futures from the Python standard library. The facilities provided by Evergreen are however cooperative, that is, while a task is busy waiting for some i/o other tasks will have their chance to run.

“Show me the code!” I hear you say. Sure, it’s up here on GitHub, released under the MIT license. Since the usual example is a web crawler, here you have one.

Did I mention it supports Python 2 and 3?

“Is it production ready?” I hear you say. It’s still on a very early stage, but I believe the foundation is solid. However, the APIs provided by Evergreen may change a bit until I feel confortable with them. All feedback is welcome, so if you give it a try do let me know!

I’d like to thank all authors of similar libraries for releasing their work as Open Source which I could look into and learn from.

I hope Evergreen can help you solve some problems and you enjoy using it as much as I do developing it.

:wq